Velcro

Velcro, a widely used fastening system, has an interesting history that dates back to the mid-20th century. The concept of Velcro was developed by Swiss engineer George de Mestral, who was inspired by nature.

In 1941, de Mestral went for a walk in the Swiss Alps and noticed how burdock burrs clung to his clothing and his dog's fur. Intrigued by this natural mechanism, he decided to examine the burrs under a microscope. De Mestral discovered that the burrs had small hooks that caught on the loops of fabric and fur, leading him to envision a similar system for fastening.

Over the next decade, de Mestral worked on developing a synthetic version of this natural fastening mechanism. His key breakthrough came in 1955 when he patented his invention, which he named "Velcro" – a combination of the French words "velours" (velvet) and "crochet" (hook). The Velcro system consists of two components: one side with tiny hooks and the other with small loops.

Velcro initially met with skepticism and resistance from industry, but its versatility and convenience soon won widespread acceptance. The material found applications in various fields, including clothing, footwear, aerospace, and medicine. It became particularly popular in the space industry because it could securely fasten objects in a zero-gravity environment.

Velcro gained global recognition during the 1960s and 1970s as it became a staple in the manufacturing of clothing, bags, and a wide range of products. The ease of use and durability of Velcro contributed to its success, making it a preferred alternative to traditional fastening methods like zippers and buttons.

Since its invention, Velcro has undergone several improvements and variations to suit different needs. Today, it is an integral part of everyday life, used in various applications ranging from children's shoes to medical devices. The history of Velcro showcases how a simple observation in nature can lead to groundbreaking inventions with widespread implications.

The Electronic Game: Simon

"Simon" is a classic electronic memory game that was first introduced in 1978 by Ralph H. Baer and Howard J. Morrison. The game has since become an iconic and enduring toy, challenging players of all ages to test and improve their memory and concentration. In this report, we will explore the history of "Simon," its gameplay, and its impact on popular culture.

"Simon" was developed by Baer and Morrison and marketed by the Milton Bradley Company. The game was inspired by Atari's arcade game "Touch Me." The name "Simon" comes from the children's game "Simon Says," and it reflects the game's simple yet challenging premise.

Gameplay:

"Simon" consists of a circular device with four colored buttons: red, blue, green, and yellow. The game is designed to test players' memory and concentration. The gameplay can be summarized as follows:

1. The device generates a random sequence of colors by lighting up the buttons in a specific order.

2. The player's objective is to replicate the sequence correctly by pressing the buttons in the same order.

3. If the player successfully replicates the sequence, the game adds an extra step to the pattern.

4. The player's goal is to continue repeating the growing sequence for as long as possible without making a mistake.

5. As the game progresses, the sequences become longer and more challenging.
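The five steps above amount to a simple loop. Below is a minimal Python sketch of that loop, offered as an illustration rather than a description of the toy's actual electronics. The `play_simon` function, the `respond` callback that stands in for the player, and the 20-round cap are hypothetical choices made for this sketch; the real device read physical buttons, not a list of color names.

```python
import random
from typing import Callable, List

# The four buttons named in the description above.
COLORS = ["red", "blue", "green", "yellow"]


def play_simon(
    respond: Callable[[List[str]], List[str]],
    rng: random.Random,
    max_rounds: int = 20,
) -> int:
    """Play one game and return how many rounds the player completed.

    `respond` is a hypothetical stand-in for the player: it receives the
    sequence the device just showed and returns the buttons the player
    presses, in order. `max_rounds` is an arbitrary cap for this sketch.
    """
    sequence: List[str] = []
    for completed in range(max_rounds):
        # Each round extends the pattern by one randomly chosen color.
        sequence.append(rng.choice(COLORS))
        # The player must repeat the whole sequence exactly.
        if respond(list(sequence)) != sequence:
            return completed  # a single mistake ends the game
    return max_rounds  # the player survived every round


def perfect_player(seq: List[str]) -> List[str]:
    """Hypothetical player who always echoes the sequence back."""
    return seq


def forgetful_player(seq: List[str]) -> List[str]:
    """Hypothetical player who drops the last step once the pattern exceeds five colors."""
    return seq if len(seq) <= 5 else seq[:-1]


if __name__ == "__main__":
    print(play_simon(perfect_player, random.Random(0)))    # 20
    print(play_simon(forgetful_player, random.Random(0)))  # 5
```

Passing the random generator in as a parameter makes a game repeatable: the same seed always produces the same color sequence, which is convenient when trying out different simulated players.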

Impact on Popular Culture

"Simon" quickly became a cultural phenomenon after its introduction. It garnered widespread popularity and recognition and became synonymous with the concept of memory and pattern recognition games. The game has had a lasting impact in the following ways:

1. Iconic Design: "Simon" is known for its distinctive design, with its circular shape and brightly colored buttons. It has become an iconic representation of classic electronic games.

2. Enduring Appeal: Despite being released over four decades ago, "Simon" continues to be enjoyed by people of all ages. Its simple yet addictive gameplay has contributed to its enduring appeal.

3. Educational Value: "Simon" is often used as an educational tool to improve memory, cognitive skills, and concentration, particularly in children.

4. References in Popular Culture: "Simon" has made appearances in various forms of media, including movies, television shows, and literature, cementing its place in popular culture.

5. Ongoing Variations: Over the years, different versions and adaptations of "Simon" have been released, catering to modern audiences with updated features and aesthetics.

"Simon" is a classic electronic memory game that has left an indelible mark on popular culture. Its simple yet challenging gameplay has appealed to generations of players, and its iconic design continues to make it a recognizable symbol of classic electronic gaming. The enduring popularity and educational value of "Simon" highlight its significance in the world of games and entertainment.

Operation Eagle Claw

Operation Eagle Claw was a failed United States military operation that took place in April 1980. Its primary objective was to rescue 52 American hostages held at the U.S. Embassy in Tehran, Iran, following the 1979 Iranian Revolution. Its planning phase was code-named Operation Rice Bowl, but the operation is better known as Eagle Claw.

Here is a summary of the key details and findings from the Operation Eagle Claw report:

1. Objective: The primary objective of Operation Eagle Claw was to rescue the American hostages held at the U.S. Embassy in Tehran and bring them back to the United States safely.

2. Planning and Execution: The operation was complex and involved various military units, including elements from the U.S. Army, Navy, Air Force, and Marine Corps. It was planned and executed under the overall command of Major General James B. Vaught.

3. Desert One: The operation involved a staged deployment to a location known as Desert One in Iran's Great Salt Desert. This staging area was intended to be a refueling point for the helicopters involved in the mission. However, weather conditions and mechanical failures left too few helicopters operational to continue, and the mission was aborted at Desert One. During the withdrawal, one of the helicopters collided with a refueling aircraft.

4. Casualties: The collision at Desert One killed eight American service members. The failed mission was also a significant setback for the U.S. government's efforts to secure the release of the hostages, who remained in captivity for a total of 444 days.

5. Aftermath: The failure of Operation Eagle Claw had several consequences, including the resignation of several high-ranking military officers. It also led to a reevaluation of U.S. military capabilities and a focus on improving special operations forces, which eventually led to the creation of the U.S. Special Operations Command (USSOCOM).

6. Lessons Learned: The operation highlighted the need for better coordination among military services and agencies and the importance of thorough planning and preparation for complex missions. It also emphasized the need for dedicated special operations forces capable of executing high-risk missions.

7. Diplomatic Resolution: Ultimately, the hostages were released on January 20, 1981, shortly after the inauguration of President Ronald Reagan. Their release was the result of months of negotiations between the U.S. government and the Iranian government.

Operation Eagle Claw remains a significant event in U.S. military history and is often studied as a case study in military planning and special operations. The lessons learned from the operation have informed subsequent military operations and contributed to the development of more effective special operations capabilities within the U.S. military.

Zenith Space Command

Zenith Space Command was a pioneering remote control device for televisions first introduced in the 1950s. It was one of the earliest wireless remote controls for TVs, predating the more common infrared remotes that came later.

The Zenith Space Command used ultrasonic sound waves to send commands to the television set. When a button was pressed on the remote control, it emitted an inaudible ultrasonic signal that the TV could detect and interpret as a command, such as changing the channel or adjusting the volume.

Unlike Zenith's earlier "Lazy Bones" remote, which was connected to the television by a cable, the Space Command was fully wireless. It allowed viewers to control the TV from across the room, a significant advancement. Before remote controls, viewers had to get up and adjust the television's controls or mechanical tuning knobs by hand.

The Zenith Space Command remotes were groundbreaking and became widely popular in their day. They paved the way for the development of more advanced and sophisticated remote control technologies that followed, including the infrared remotes that are now commonly used for televisions and other electronic devices.

The Car Horn

The history of the car horn dates back to the early days of automobiles, and it has evolved significantly over the years. Here's a brief overview of its development:

1. Early Horns:

- The earliest automobiles, in the late 19th and early 20th centuries, did not have dedicated horns. Instead, they often used rudimentary warning devices like bells, whistles, or even shouting to signal their presence to pedestrians and other road users.

2. Bulb Horns:

- In the early 1900s, bulb horns became popular. These were hand-squeezed rubber bulbs that forced air through a horn-shaped device, creating a distinctive "honk" sound. They were relatively simple and effective for their time.

3. Electric Horns:

- As automotive technology advanced, electric horns were developed. These horns used electric current to produce a louder and more consistent sound than bulb horns. They typically consisted of a metal diaphragm that an electromagnet set vibrating when current flowed through its coil.

4. Horn Button:

- Early cars often had a horn button located on the steering wheel, which allowed the driver to easily sound the horn. This layout is still common in modern vehicles.

5. Horn Variations:

- Different types of horns were developed over the years, including single-note horns and multi-note horns, each with its distinctive sound. The choice of horn could vary depending on the vehicle's size and intended use.

6. Horn Regulations:

- As road traffic regulations and safety standards evolved, so did regulations regarding vehicle horns. These regulations aimed to ensure that horns were used responsibly and did not become a source of noise pollution.

7. Air Horns:

- In larger vehicles like trucks and buses, air horns became popular due to their loud and distinctive sound. These horns used compressed air to create a powerful and attention-grabbing blast.

8. Modern Horns:

- Today's car horns are typically electric horns and are designed to meet specific safety and noise level regulations. They are an essential safety feature, used to alert other road users of the driver's presence and intent.

9. Customization:

- Some car enthusiasts customize their vehicle horns, choosing different tones or musical melodies. However, it's important to note that there are often regulations governing the use of such customized horns to prevent excessive noise.

The car horn has evolved from basic warning devices to sophisticated safety features in modern vehicles. While the technology has changed, the primary purpose of the horn—to signal one's presence and communicate with other road users—remains the same.

ENIAC

ENIAC, which stands for Electronic Numerical Integrator and Computer, was one of the earliest general-purpose electronic computers. It was designed and built during World War II to perform complex mathematical calculations for the United States Army.

Here's a brief history of ENIAC:

1. Development and Construction:

ENIAC was developed by John W. Mauchly and J. Presper Eckert at the Moore School of Electrical Engineering at the University of Pennsylvania. The project started in 1943 with the support of the U.S. Army. Mauchly and Eckert aimed to build a machine that could perform high-speed calculations for artillery trajectory tables.

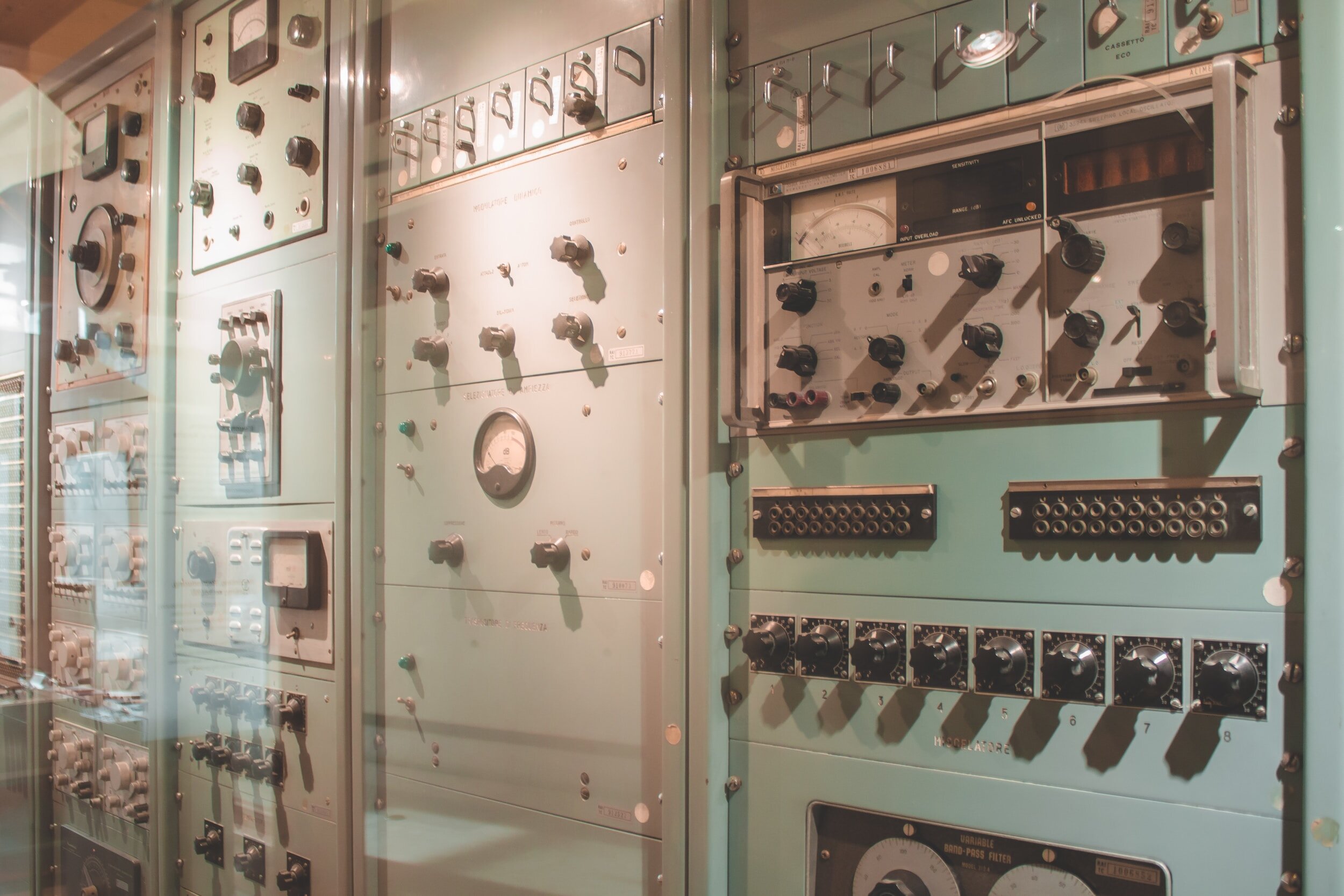

2. Design and Architecture:

ENIAC was a massive computer that occupied a large room, covering approximately 1,800 square feet (167 square meters). It consisted of 40 panels, each 9 feet tall and 2 feet wide, containing over 17,000 vacuum tubes, 70,000 resistors, 10,000 capacitors, and numerous other electronic components.

3. Functionality:

ENIAC was a decimal-based computer, capable of performing addition, subtraction, multiplication, division, and other arithmetic operations. It could also store and manipulate numbers in its internal memory. However, ENIAC was not programmable in the way modern computers are. Instead, it was reprogrammed by physically rewiring its panels and switches.

4. First Operation:

ENIAC became operational in late 1945, and its first successful calculation was performed on December 10, 1945. Its speed was dramatic: a projectile trajectory that would have taken around 20 hours to compute by hand took ENIAC about 30 seconds.

5. Contributions and Impact:

ENIAC played a crucial role in various scientific and military calculations. It was used for a range of tasks, including the development of the hydrogen bomb, weather prediction, atomic energy calculations, and more. Its successful operation marked a significant milestone in the history of computing and set the stage for further advancements in the field.

6. Legacy and Further Developments:

Following the success of ENIAC, Mauchly and Eckert went on to develop the UNIVAC I, the first commercial computer produced in the United States. This marked the transition from one-of-a-kind machines like ENIAC to computers built for sale and used for a wide range of business and government applications.

ENIAC's historical significance lies in its pioneering role as one of the earliest electronic computers, showcasing the potential of electronic computation and laying the foundation for the modern digital era.

The Answering Machine

Today, the answering machine has been replaced by voicemail. Previous generations relied on a physical device to record messages.

Before the Cellphone

Yes, people still use cellphones to make phone calls! And what would the phone be without the ability to ignore calls and send them to voicemail? Younger generations take the ability to leave voice messages for granted; they have never needed a physical device attached to the home phone to avoid missing an important message. Gone are the days of the whole family gathering around the answering machine to record a greeting, since a call could be for anyone in the household.

Ross Makes a New Answering Machine Message

A Physical Device

The most common answering machine required the user to attach the device to a phone, insert a blank cassette tape, and record a greeting. When the phone rang, the user had only a few rings to pick up before the answering machine connected. The caller could then leave a message, recorded on the cassette tape, for the owner to play back later. The development of the answering machine was a gradual process that dates to the late 19th century. There were several inventors and innovations, but the earliest tangible answering machine did not appear until the early 20th century.

George has the Answer

Telegraphone

One of the earliest attempts at creating an automatic response device was the "Telegraphone," invented by Valdemar Poulsen in 1898. The Telegraphone was designed to record telephone conversations magnetically onto a wire, allowing the user to replay the recorded messages later. While not precisely an answering machine, it laid the foundation for recording and playing back audio messages. By 1903, Poulsen had sold his patent to a group of investors, who formed the American Telegraphone Company of Washington, D.C., and sold the Telegraphone mainly as a scientific instrument. It found almost no public market: only two people purchased Telegraphones.

1980s Answering Machine Cassette

Hörzufernsprecher

By the 1930s and 1940s, inventors were working on devices that could record and play back phone messages automatically. The Dictaphone, a wax-cylinder recording device, dominated the business recording market, and a working telephone playback device promised to revolutionize business communications. One notable early answering machine was Willy Müller’s "Hörzufernsprecher," created in 1935, which recorded messages magnetically on 35mm film. It was a groundbreaking invention, but it never became widely available because of the technological limitations of the time and the disruption caused by World War II; it was manufactured and sold only in Germany.

The "Electronic Secretary"

The first widely recognized commercial answering machine came into existence in the 1950s. Dr. Kazuo Hashimoto, a Japanese-American engineer, invented it in 1954, developing the "Electronic Secretary" while working at Bell Labs. The machine used magnetic recording tape to store and play back voice messages. It was hefty and required manual operation, but it marked a significant step forward in the development of answering machines.

The Electronic Secretary was marketed principally to businesses and professionals, but it paved the way for the more compact and user-friendly answering machines that became popular in homes in the 1960s and 1970s. These machines used cassette tapes and microcassettes to record and play back messages, and some of their most revolutionary features were the tape counter, remote message retrieval, and adjustable recording time.

Families Recorded Together

Recording the outgoing message on the answering machine became a creative outlet for people in the 1980s: the more outrageous, the better. Celebrities advertised prerecorded outgoing messages to consumers, and companies capitalized by selling novelty greeting tapes for answering machines.

Dangers

The danger of physical answering machines was that the recordings were unencrypted and could be played on any tape deck. Anyone in the house who pressed the play button could hear every message. Legally, answering machine tapes were not considered wiretapping, so they could be used against a person without the specific warrant required to record phone conversations.

1980s State of the Art Answering Machine

Digital Machines End an Era

Technology-hungry consumers in the 1980s and 1990s made the answering machine more popular as the technology improved and prices dropped. Digital answering machines, which offered better sound quality and more features, replaced analog ones. The smartphones and digital communication of the 2000s effectively ended the popularity of the traditional answering machine. Cellphones have largely replaced landlines, yet today's voicemail grew out of these early innovations and the same need never to miss an important message.

Obituary: How Deaths Became Public Interest

If you were considered a significant person and died in Rome after 59 B.C., your death might be reported in the Acta Diurna, or “Daily Acts.” This daily government gazette, published on papyrus and posted publicly, reported news along with a list of recent deaths. Created by Julius Caesar, these notices were posted on temples, markets, and public gathering places for the masses to read. Although the gazette was designed to inform the public, reading about the deaths of significant individuals became a popular section of the news and appealed to a morbid human curiosity.

The practice of posting deaths continued but became limited in scope as Rome collapsed. After Johannes Gutenberg invented movable-type printing around 1440, the rapid growth of printing spread information more widely throughout Europe, and listing the deaths of prominent individuals remained popular. Colonial American newspapers printed death notices, though they were limited by the available technology; by the middle of the 1700s, imported printing presses allowed most newspapers to publish local deaths. Still, limited newspaper space meant that only famous or sensational deaths were printed, and smaller communities were more likely than large cities to print death notices. Even George Washington received only a couple of short paragraphs announcing his death.

As printing technology improved, more comprehensive death notices became possible. The names of these sections also varied by newspaper: titles like “Memorial Advertisements,” “Death Acknowledgements,” and even simply “Died” were used as section headings. Although death announcements remained moderately popular, the American Civil War dramatically increased interest in them. Around this time, the term “obituary” became more common in newspapers. The word comes from the Latin obitus, meaning death.

During the American Civil War, families of soldiers from the North and the South scoured newspaper reports of battles. With little official information and few letters arriving home, families were eager for any news about the fate of their loved ones. As obituary notices increased, they also included more biographical information to help families identify the deceased and spread the news.

After the American Civil War, classified obituaries became popular. People could post succinct obituaries summarizing the deceased's accomplishments in local papers to announce a death and inform neighbors of the funeral services. During the Industrial Revolution, obituaries included the individual’s wealth, either to heighten readers' interest or to make them feel better about their own wealth (or lack of it). This trend grew in the late 1800s as newspapers began to print detailed accounts of how a person died. Gruesome, whimsical, or unusual deaths became the staple of what was then called “death journalism.”

Early 20th-century obituaries still described how the individual died but gradually began to include a condensed life story. Obituaries developed into tributes to the deceased, sometimes with poems about their lives. World War I and World War II continued the trend of informing the public about soldiers, but the notices shifted from details of how a soldier died to simply the soldier's location and rank. By the 1950s, obituaries had changed again to detail an individual’s accomplishments and announce funeral arrangements for the community.

Obituaries in the later 20th century sometimes still noted the manner of death but replaced morbid details with a life story, much like modern obituaries. By the end of the century, information on an individual’s wealth and manner of death was uncommon, and obituaries of the “common man” became popular. Today, electronic publications and social media have largely replaced newspapers. These new channels have changed how people write and receive obituaries, but most still publish only basic information about funeral services and the names of surviving family members. Famous people, on the other hand, receive lengthy tributes detailing their lives and accomplishments in numerous electronic forms. Regrettably, websites now exist for wagering on when well-known individuals will die, and the more macabre details of death have returned to popularity.

Cold Drinks on a Warm Day: How Ice Became Available to Everyone

Cold Ice on a Hot Day

As the temperatures rise outside, think of the one place in the house that is always cool - the refrigerator. The home may be hot during summer, but the fridge remains cold. Ice from the refrigerator makes beverages cold and refreshing. Food from the fridge makes meal preparation easy, without running to the store whenever you want fresh food. Many appliances and innovations have made modern life convenient and easy, but the refrigerator is one of the most overlooked inventions we take for granted.

Yakhchāls

The cold of winter provides ice. In the spring, the ice melts. Even ancient civilizations recognized that the cold provided the ability to preserve food during the winter. The problem then became how to make the ice last throughout the year without the technology necessary to convert warm air into cold. A simple solution was the icehouse. Collecting ice during the winter months and storing the ice in underground chambers slowed the melting process. This concept dates to ancient times as a means of preserving food. Utilized in early China and Egypt, these underground ice houses allowed food to remain cold during the hot summer seasons. As far back as 400 BC, the Persians built yakhchāls in the desert. Yakhchāls, or ice pit houses, not only stored ice but were designed to take advantage of the low humidity of the desert and use evaporative cooling to lower the temperature inside.

Common Sense Approach

Collecting ice during the cold months and storing it underground during the warmer months remained the primary means of refrigeration until the 19th century. By then, ice was traded like lumber or gold. Advances in transportation made it possible to move ice quickly into warmer climates, and entrepreneurs recognized that ice harvested in cold regions during the winter could be shipped and sold in hot locations during the summer. The hotter the destination, the more lucrative the ice trade. The self-proclaimed Boston Ice King, Frederic Tudor, launched the commercial ice trade in 1806. Hoping to capitalize on wealthy Europeans living in the hot Caribbean, Tudor harvested ice from New England and shipped it, along with specially designed icehouses, to the Caribbean. As profits grew, Tudor expanded the business to deliver ice across the Midwest of the United States, into South America, Australia, and even China. At its peak, the commercial ice industry employed almost 100,000 people.

Walden Pond

Frederic Tudor harvested ice from Walden Pond, the pond made famous by Henry David Thoreau.

Delivery of Ice

Adding to commercial ice's profitability, manufacturers created consumer iceboxes. The icebox, or ice chest, became a common household appliance in the late 19th century. These early refrigerators were simply insulated wooden or metal containers, with straw, sawdust, or cork as insulation. Ice, still harvested from colder regions, was added to the box to keep its contents cool during the hot summer months, and commercial ice was delivered to homes regularly, much like milk.

Artificial Refrigeration

The ability to create artificial refrigeration began in the middle of the 1700s. Vapor-compression refrigeration systems were invented in 1834, yet more than a decade would pass before the technology became commercially practical. The first commercial ice-making machine was designed in the mid-1800s. Recognizing the potential for business use, numerous manufacturers created refrigeration systems and competed for the business market. The first successful automatic refrigerator was created in 1856 by Australian inventor James Harrison. This system used vapor compression to cool the air inside a box and was sold primarily for commercial purposes. Harrison's invention was an immediate success because it was designed for the brewing industry to keep beer cold, and soon every brewery wanted one!

Thomas Midgley Jr.

Only in 1913 did refrigerator technology develop for consumer use. That year, Fred W. Wolf patented a domestic refrigerator, the DOMestic ELectric REfrigerator, or DOMELRE. Wolf’s design was a significant step in bringing refrigeration technology into homes and included the first ice tray for freezing water into ice cubes. In 1923, Frigidaire created a self-contained refrigeration unit, although it was popular and affordable mainly for business use. The unfortunate aspect of these early refrigerators was their use of toxic gases such as ammonia and sulfur dioxide as refrigerants. Thomas Midgley Jr., an American engineer, developed Freon in the late 1920s. Freon was non-toxic and non-flammable, making refrigerators far safer to keep in the home, although chlorofluorocarbons like Freon were later found to damage the ozone layer.

General Electric

The earliest refrigerators were available to the public as early as the 1920s, but mass production and the spread of household electricity did not make them widely available to consumers until the 1930s and 1940s. In a 1920s advertisement, General Electric proclaimed that the fridge made it safe to be hungry again! By the 1950s, improvements in design and lower prices had made the appliance affordable and widely available. This marked a transformative shift in how people stored and preserved food.

Indispensable Appliance

The modern household, dorm room, and apartment would not be complete without the refrigerator. Thanks to the fridge, ice is at the fingertips of every consumer. In addition, the refrigerator changed how food is preserved, how convenient ready-made meals are, and society's overall health. The latest improvements to the fridge focus on increasing efficiency and finding new means of cooling without harmful chemicals. Energy Star ratings and regulations have been implemented to encourage the use of energy-efficient refrigerators. Today, refrigerators come in various sizes, styles, and features, including side-by-side doors, bottom freezers, and smart technology. Some even have advanced features like water and ice dispensers, temperature-controlled compartments, and digital displays.

Zoot Suit Riots

The Zoot Suit Riots were a series of racially charged clashes in Los Angeles, California, in 1943. The riots involved violence between white servicemen, predominantly sailors and soldiers, and Mexican-American youths who wore distinctive clothing known as "zoot suits." A "zoot suit" is a men's clothing style popularized during the 1940s and characterized by oversized, exaggerated proportions. It typically features a long, drape-cut jacket with wide lapels, high-waisted baggy trousers, a long pocket-watch chain, a fedora hat, and often a wide, brightly colored tie.

During the 1940s, World War II was in full swing, and Los Angeles saw a significant influx of military personnel due to its strategic military installations and defense industries. The city also had a large Mexican-American population, many of whom faced discrimination and social challenges. Zoot suits were especially popular among young Mexican-Americans.

Tensions between white servicemen and Mexican-American youths had been building since August 1942, when a young Mexican-American man named José Díaz was found dead near a reservoir known as Sleepy Lagoon. Despite a lack of evidence, several Mexican-American youths were arrested and wrongfully convicted of his murder in what became known as the "Sleepy Lagoon Murder" case. The riots themselves were set off by a clash between sailors and Mexican-American youths on May 31, 1943, and a series of confrontations followed in June 1943.

In response to this incident, a group of sailors allegedly targeted Mexican Americans, especially those wearing zoot suits, blaming them for the murder and other perceived social issues. The attacks on Mexican-American youth wearing zoot suits became more frequent, leading to violence and unrest.

The violence began on June 3, 1943, and peaked on June 7, when a mob of several thousand servicemen and civilians roamed the streets of Los Angeles, looking for Mexican-American youths to attack. They targeted and beat anyone wearing a zoot suit and sometimes stripped the victims of their clothing. The police initially did little to intervene, which led to further chaos and lawlessness.

The Zoot Suit Riots resulted in numerous injuries and arrests, primarily affecting Mexican-American youths. While some white rioters faced consequences for their actions, the overall response from law enforcement and the media was criticized for being biased toward the white service members.

In the aftermath of the riots, the authorities arrested hundreds of Mexican-American youths, many of whom were not directly involved in the violence. This further deepened the sense of injustice and racial tension in the community.

The Zoot Suit Riots brought attention to the issue of racial discrimination and unequal treatment of minority communities, particularly Mexican-Americans, in the United States. It also highlighted the role of the media in shaping public perceptions and influencing public opinion during times of social unrest.

In subsequent years, the Zoot Suit Riots symbolized resistance and unity within the Mexican-American community. The incident also catalyzed civil rights activism, raising awareness about the need for equal rights and social justice for all Americans, regardless of ethnicity or background.